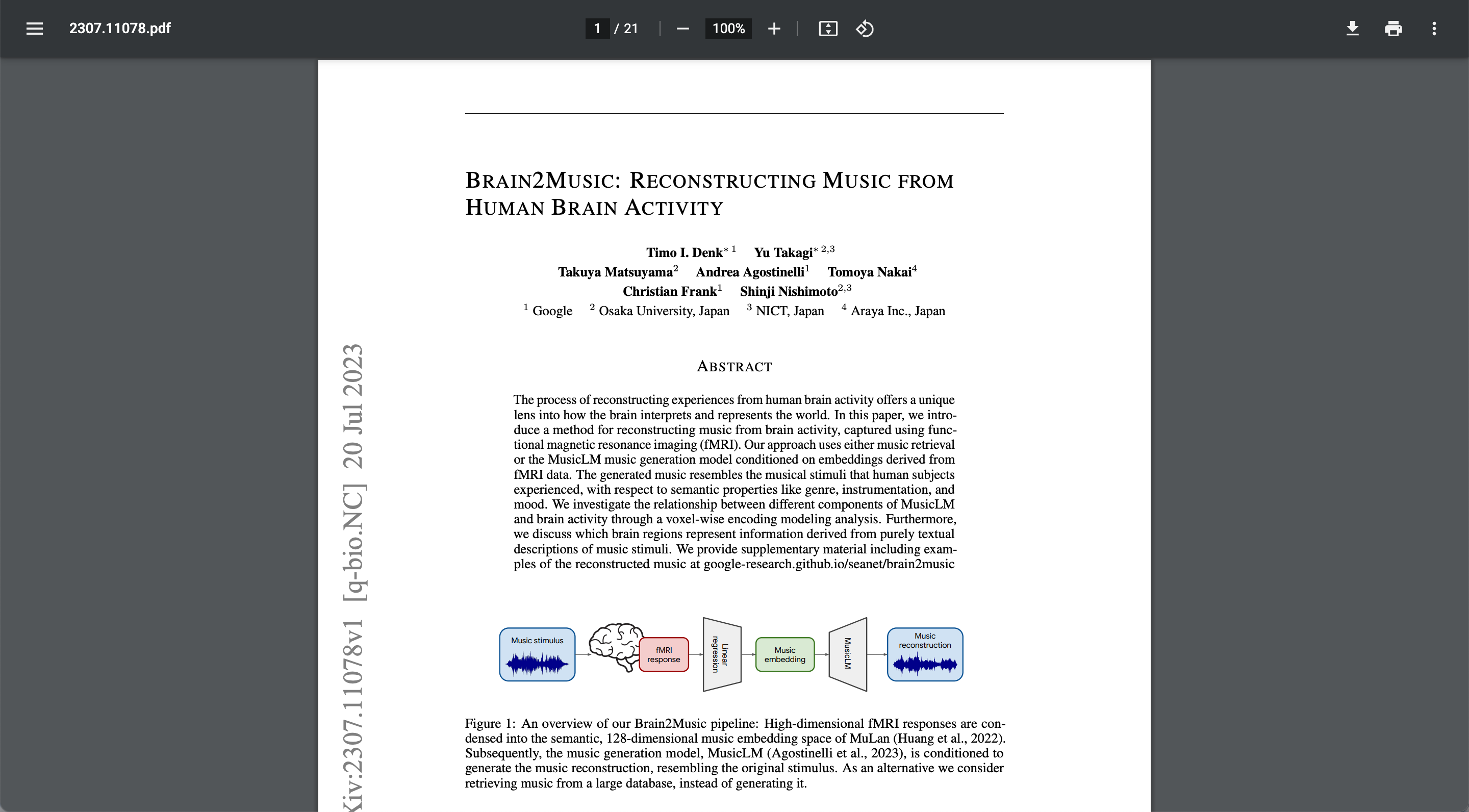

Brain2Music: Reconstructing Music from Human Brain Activity

In March 2023, Yu Takagi and Shinji Nishimoto published their paper on image reconstruction from human brain activity. The research made the news. Often shared was this image, in which one can see an image stimulus (what test subjects saw while their brain activity was recorded) juxtaposed with the reconstruction:

Around the same time, we had just published the music generation model MusicLM. Conceptually, image and music generation models both rely on a joint embedding model that relates images (or music) to text. In the image case it is called CLIP and in the music case it is MuLan.

My manager just asked “Why don’t we do the same for music?” After emailing Yu and Shinji, it became clear that the interest in that research was mutual, and we kicked off the project. A few months later we published our Brain2Music paper, which is briefly explained below. The full author list is: Timo I. Denk, Yu Takagi, Takuya Matsuyama, Andrea Agostinelli, Tomoya Nakai, Christian Frank, Shinji Nishimoto.

You know these magnetic resonance imaging (MRI) machines that can be used to inspect various body parts, such as a knee? At Osaka University in Japan they have a few of these. Here is a photo of one of them:

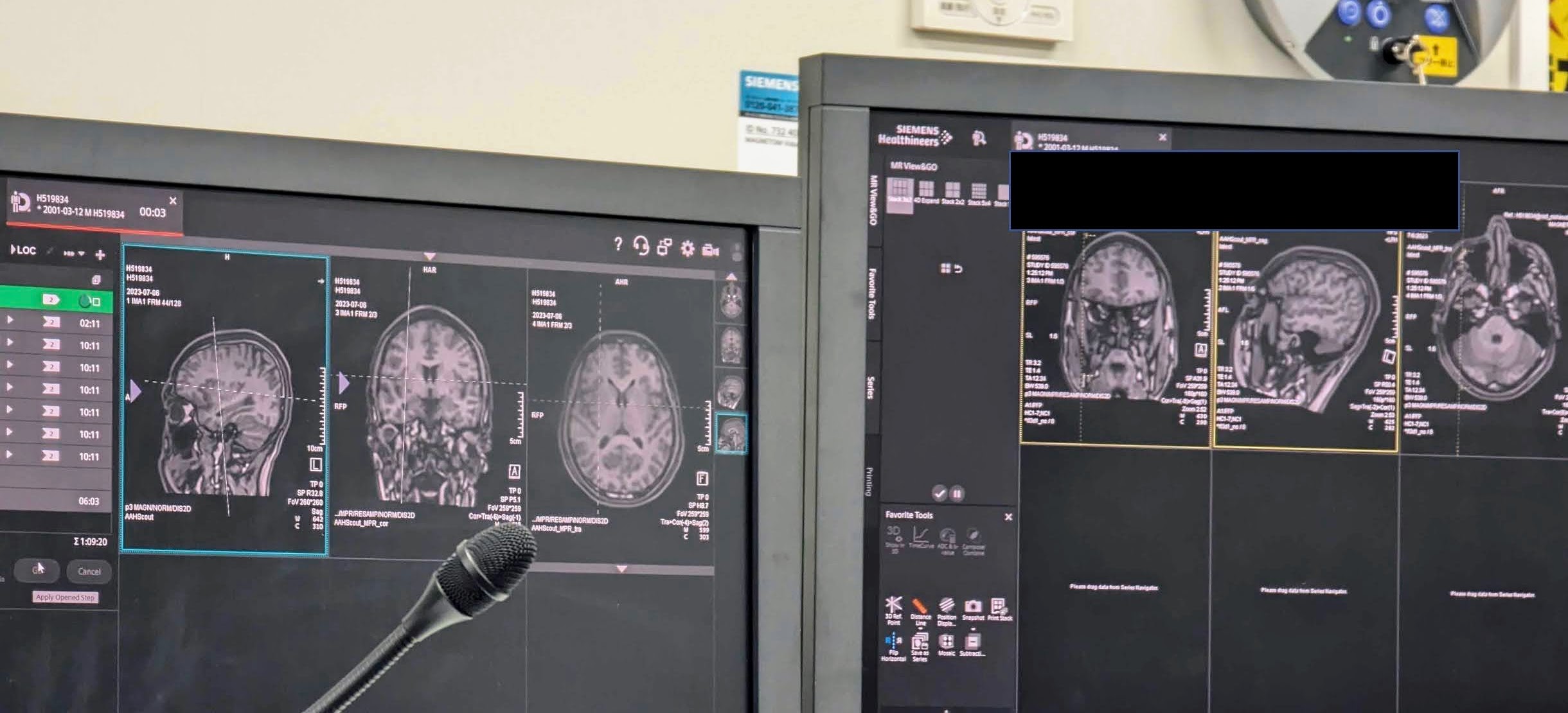

Such devices can also be used to measure blood flow in the brain. This is called functional MRI (fMRI). When neurons in a certain brain region are active, they require more oxygen, so increased neural activity is followed by a slight increase in blood flow a few seconds later. Below is a photo I took in the operation room while such an fMRI scan was carried out.

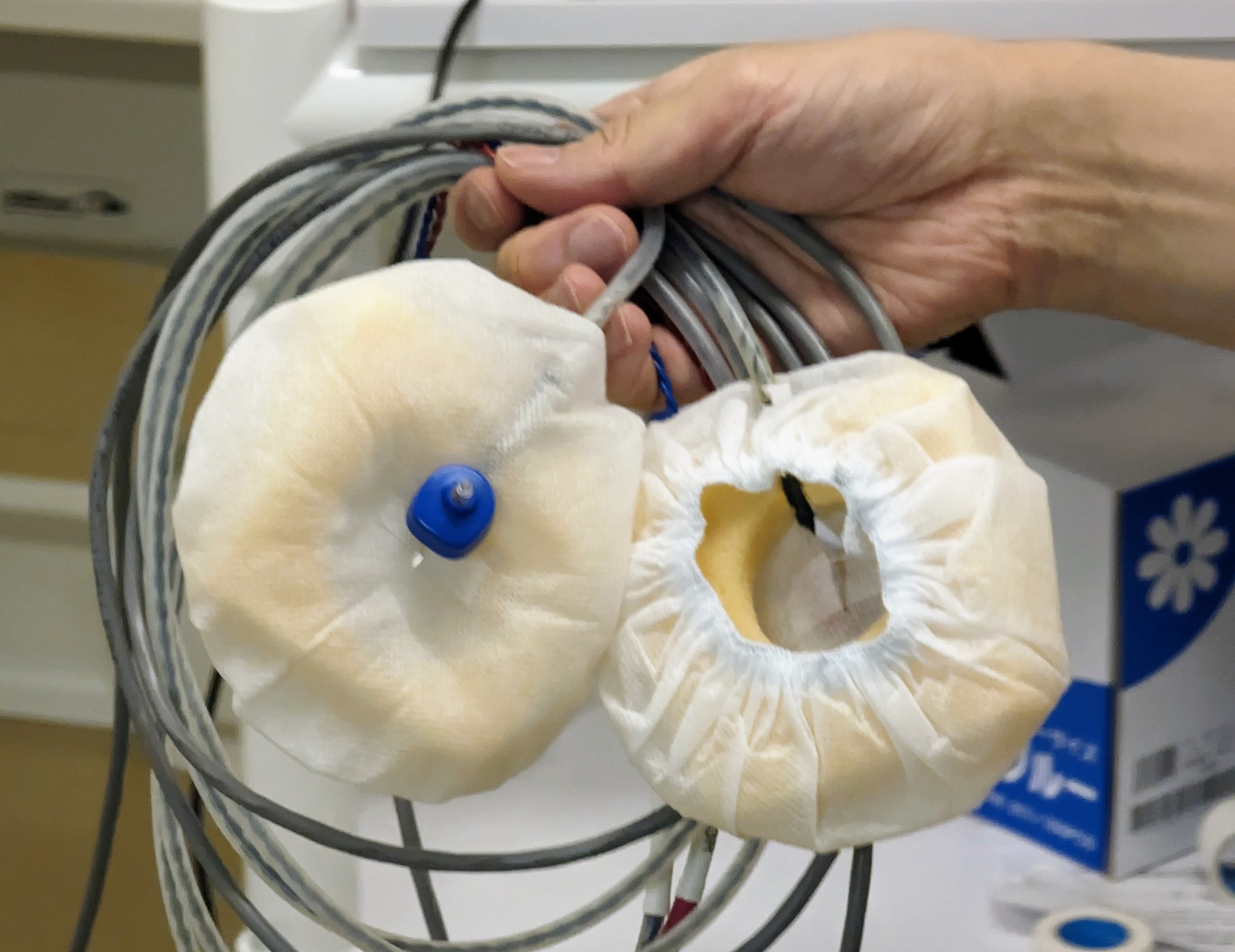

Because of the strong magnets that MRI machines rely on, absolutely nothing metallic can enter the tube. To stimulate test subjects with music while their brain is being scanned, one can use special headphones designed to be MRI compatible. They look something like this:

With all these tools in place, the neuroscience researchers at Osaka University collected brain activity data of five test subjects while they were listening to music. The resulting dataset was published by Nakai et al. (2022).

We used this dataset to predict an embedding (think of it as a low-dimensional representation of music) that our music generation model can convert back into a music track. The resulting music reconstructions can be compared to the original stimuli. They are pretty similar, just like the reconstructed images were. You are invited to listen to some on this website: google-research.github.io/seanet/brain2music
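To make the "predict an embedding from brain activity" step a bit more concrete, here is a minimal sketch. It assumes a plain ridge (L2-regularized linear) regression from voxel responses to embedding vectors, which is one common choice for this kind of decoding; this post does not spell out the exact method, so treat the technique, the synthetic data, and all sizes (`n_voxels`, `embed_dim`, `alpha`) as illustrative assumptions rather than the setup from the paper.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Synthetic stand-in data (NOT real fMRI): sizes are made up for illustration.
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, embed_dim = 200, 20, 50, 8

# Pretend each music clip yields one voxel-response vector and one embedding,
# related by an unknown linear map plus a little noise.
true_map = rng.normal(size=(n_voxels, embed_dim))
X = rng.normal(size=(n_train + n_test, n_voxels))
Y = X @ true_map + 0.1 * rng.normal(size=(n_train + n_test, embed_dim))

X_train, X_test = X[:n_train], X[n_train:]
Y_train, Y_test = Y[:n_train], Y[n_train:]

# Fit on training clips, then predict embeddings for held-out clips.
W = fit_ridge(X_train, Y_train, alpha=1.0)
Y_pred = X_test @ W

# Compare predicted and target embeddings on the held-out clips.
mean_sim = np.mean([cosine_similarity(p, t) for p, t in zip(Y_pred, Y_test)])
print(f"mean cosine similarity on held-out clips: {mean_sim:.3f}")
```

In a real pipeline the predicted embedding would then be handed to the music generation model; that part is not sketched here.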

The project was incredibly fun to work on. I liked digging into an entirely new domain, neuroscience, and learning from the folks in Osaka. Visiting them in Japan to work together was my first trip to East Asia. Below is a photo of us going out for dinner after work.

An interesting future research direction would be to attempt the reconstruction of music that is purely imagined. For that, test subjects would think about music rather than listen to it. That would qualify for a Mind2Music paper!

Public Responses to Brain2Music

Our paper was shared on several platforms. Some are listed here:

- Rowan Cheung on X: “This is incredible. AI can see what you are listening to.” twitter.com/rowancheung/status/1683482203848622080

- @AK on X: “Brain2Music: Reconstructing Music from Human Brain Activity” twitter.com/_akhaliq/status/1682247030222012419

- @ai_database on X: twitter.com/ai_database/status/1682573044601200646

- ZDNET: Google’s new AI model generates music from your brain activity. Listen for yourself

- Live Science: Google’s ‘mind-reading’ AI can tell what music you listened to based on your brain signals

- Tech Times: Google AI Sound Generated ‘MusicLM’ Creates Sound Based on Brain Activity

- Synced: Brain2Music: Unveiling the intricacies of Human Interactions with Music

- MarkTechPost: Meet Brain2Music: An AI Method for Reconstructing Music from Brain Activity Captured Using Functional Magnetic Resonance Imaging (fMRI)

- The Indian Express: Google’s Brain2Music AI interprets brain signals to reproduce the music you liked

- TechXplore: Brain2Music taps thoughts to reproduce music

- Reddit r/singularity: Brain2Music: Reconstructing Music from Human Brain Activity

- SPIEGEL (German): KI-generierte Musik aus Gehirnströmen - Radio Google, Radio Gaga

- Abema (Japanese): abema.tv/video/episode/89-71_s10_p5236